Lecture Video and Recap: Ziyu Yao on 'Towards Enhancing the Utilization of Large Language Models for Humans'

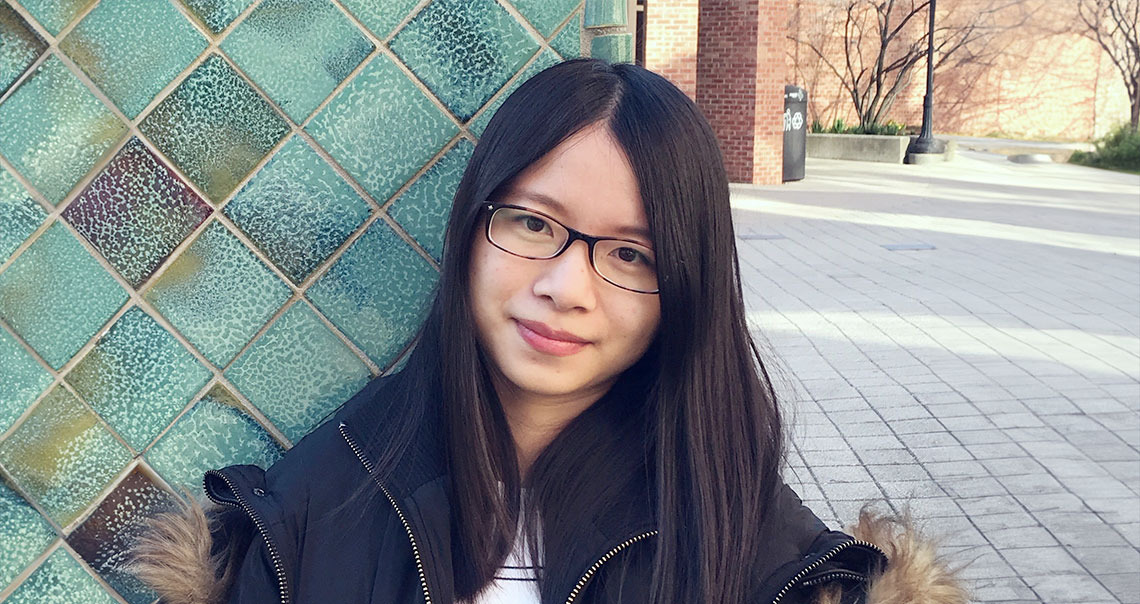

Ziyu Yao, Assistant Professor of Computer Science, George Mason University

Lecture Video

Lecture Recap

Colloquium held March 14, 2024.

Large language models (LLMs) have rapidly transformed the landscape of language technologies. They now serve as the backbone of many applications and are used by millions of people. In this talk, Ziyu Yao presents two projects from her group aimed at further enhancing the utilization of LLMs.

The first project examines the "cost efficiency" of LLMs: how to reduce the monetary cost for users who query LLMs through APIs. The work assumes a "cascade" of LLMs, based on the intuition that easy queries can be handled by a weaker but cheaper LLM, while only challenging queries require the stronger but more costly one. Focusing on LLM reasoning tasks, the group proposes leveraging a mixture of thought representations (MoT) to decide when to query the stronger LLM. Their method is shown to achieve task performance comparable to using the stronger LLM (GPT-4) alone, at only 40% of the cost.

The second project turns to the "accessibility" of LLMs. The group studies how to optimize user prompts to LLMs in a zero-shot setting, so as to further democratize human access to this technology. To this end, they propose an approach that iteratively rewrites human prompts for individual task instances, following an "LLM in the loop" design. The approach is shown to significantly outperform both naive zero-shot prompting and a strong baseline that refines the LLM's outputs rather than its input prompts.

Finally, Yao concludes the talk by presenting other research in her group on interpreting LLMs and applying them for social good.
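The cost-saving cascade from the first project can be illustrated with a minimal Python sketch. The recap does not describe the exact decision rule, so this example assumes one plausible variant: sample several answers from the weaker LLM under two thought representations (chain-of-thought, "cot", and program-of-thought, "pot"), and escalate to the stronger LLM only when those answers disagree too much. The function names `majority_answer` and `cascade_answer`, the `threshold` of 0.7, and `samples_per_repr` are hypothetical choices, and the LLMs are toy stand-in callables rather than real API clients.

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical stand-in type for an LLM call: takes a question and a
# thought representation ("cot" or "pot") and returns a final answer string.
LLM = Callable[[str, str], str]


def majority_answer(answers: List[str]) -> Tuple[str, float]:
    """Return the most frequent answer and the fraction of samples agreeing with it."""
    answer, count = Counter(answers).most_common(1)[0]
    return answer, count / len(answers)


def cascade_answer(
    question: str,
    weak_llm: LLM,
    strong_llm: LLM,
    samples_per_repr: int = 3,
    threshold: float = 0.7,
) -> Tuple[str, str]:
    """Answer with the cheap LLM when its sampled answers agree; otherwise escalate.

    Returns (answer, which_model_answered).
    """
    # Sample answers from the weaker LLM under a mixture of thought
    # representations: chain-of-thought ("cot") and program-of-thought ("pot").
    answers = [
        weak_llm(question, representation)
        for representation in ("cot", "pot")
        for _ in range(samples_per_repr)
    ]
    answer, agreement = majority_answer(answers)

    # High agreement is treated as a signal that the question is "easy"
    # enough for the weaker model, so the costly call is skipped.
    if agreement >= threshold:
        return answer, "weak"

    # Low agreement: defer to the stronger (and more expensive) LLM.
    return strong_llm(question, "cot"), "strong"


if __name__ == "__main__":
    # Toy stand-ins: one weak model that is consistent, one that is not.
    consistent_weak = lambda q, r: "42"
    inconsistent_weak = lambda q, r: "4" if r == "cot" else "5"
    strong = lambda q, r: "9"

    print(cascade_answer("easy question", consistent_weak, strong))    # ('42', 'weak')
    print(cascade_answer("hard question", inconsistent_weak, strong))  # ('9', 'strong')
```

A higher `threshold` sends more questions to the stronger model, trading cost for accuracy. The sketch only illustrates that trade-off; it does not reproduce the 40% cost figure reported in the talk.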

About Ziyu Yao

Ziyu Yao is an assistant professor in the Department of Computer Science at George Mason University, where she co-leads the George Mason NLP group. She is also affiliated with the C4I & Cyber Center, the Center for Advancing Human-Machine Partnership and the Institute for Digital InnovAtion at GMU. She received her PhD in Computer Science and Engineering from Ohio State University in 2021. She has interned at Microsoft Semantic Machines, Carnegie Mellon University, Microsoft Research, Fujitsu Lab of America and Tsinghua University. She works in natural language processing and artificial intelligence, particularly on building natural language interfaces (e.g., question answering and dialogue systems) that can reliably assist humans in knowledge acquisition and task completion. Specific topics include language and code, human-AI interaction and information intelligence with LLMs. She recently launched Gentopia-AI, an open-source platform for creating, evaluating and sharing augmented language model-based agents with the community.